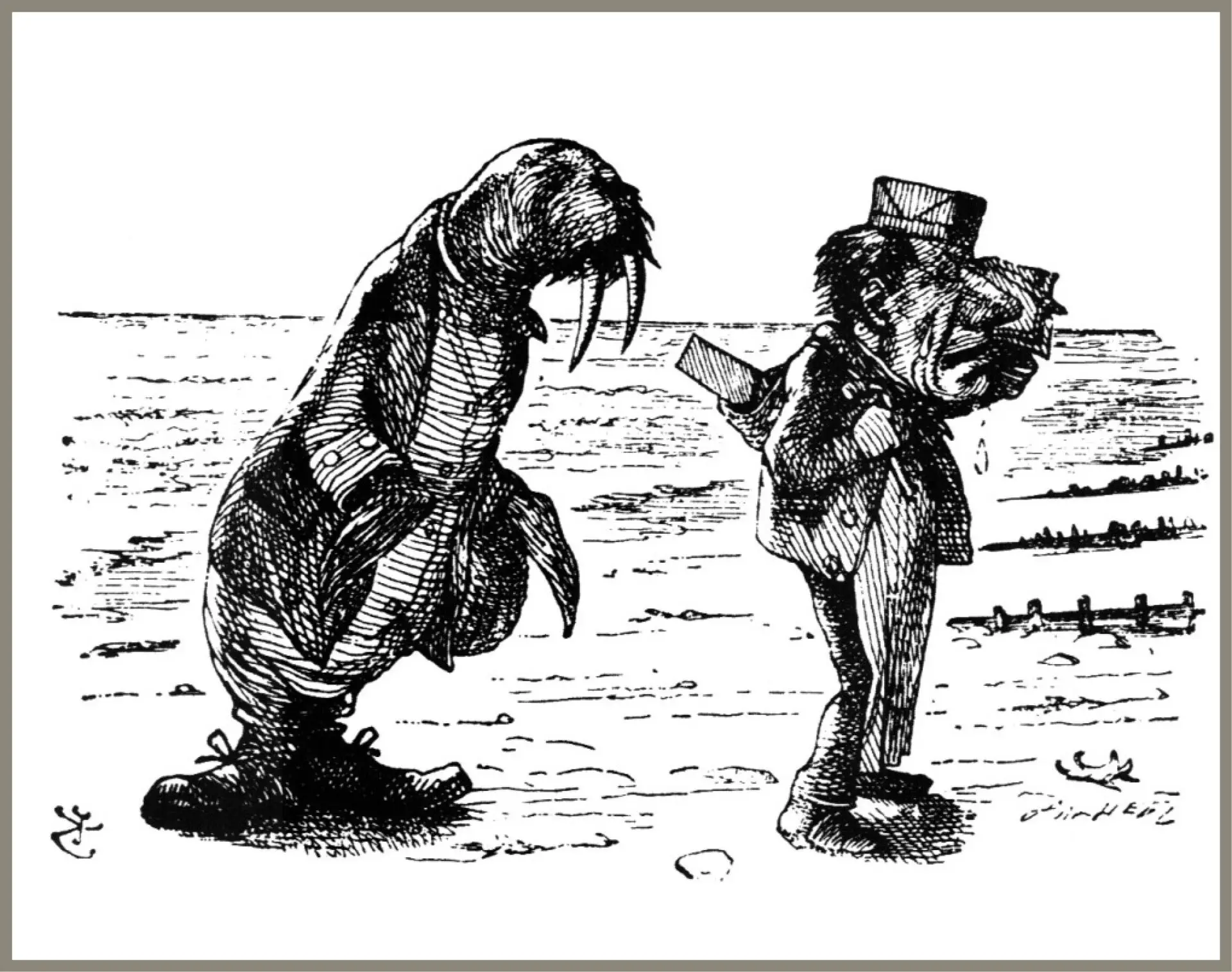

(Featured image: Remix of John Tenniel, Public domain, <https://creativecommons.org/licenses/by/2.0>, via Wikimedia Commons)

“The time has come,”

the Walrus said,

“To talk of many things:

Of shoes—and ships

—and sealing wax

—Of cabbages—and kings…

‘The Walrus and the Carpenter’, Through the Looking-Glass, Lewis Carroll. Illustration: John Tenniel.

The time has come to talk of camera sensors and mega-pixels.

‘When I use a word,’ Humpty Dumpty said in rather a scornful tone, ‘it means just what I choose it to mean – neither more nor less.’

‘Through The Looking Glass’, Lewis Carroll

One word: pixel.

Scene

The following is in the style/format of a screenplay. OK, let’s face it. Not the sort of thing that would be picked up by Netflix. But… there are precedents. Consider the oyster… Lewis Carroll [aka Charles Lutwidge Dodgson] wrote Alice’s Adventures in Wonderland and Through the Looking-Glass. He was a polymath – author, poet and mathematician. While ostensibly written for children, his books also explore logic, philosophy and the ambiguity of language. And they delight people of all ages.

The inner workings of a digital camera might lend themselves to a similar treatment. It’s all in the presentation… You get the idea. And even camera geeks might find it interesting. Alice in Wonderland was sufficiently engaging that New Scientist ran a piece, “Alice’s adventures in algebra: Wonderland solved”. It discusses how the book reflects seismic changes in the approach to algebra. And the 2010 Tim Burton movie Alice in Wonderland, with Johnny Depp, Anne Hathaway and Helena Bonham Carter among others, is a cinematic triumph, appealing to a variety of audiences.

So this establishes the precedent, sets the scene, as it were, for presenting concepts about digital cameras in the form of a screenplay.

In the scene, two people are talking about digital cameras while they sit side by side at a café. The second person has a laptop, arranged so they both can see. Stage directions are in [brackets]. An ellipsis […] indicates when the other person interrupts. Bold text is for emphasis. They use air quotes whenever “quotes” appear.

The person speaking first is in italics.

The scene begins

I know you said you’d bring me up to speed on digital cameras and sensors. But I’ve been looking. And I have a question first. A 40 mega-pixel camera caught my eye. The specs say the number of “effective pixels” is 40 million. Why not just 40 mega-pixels? I know what a pixel is. Everyone knows.

Well, yes and no. A “pixel” has a specific meaning. It’s really all about resolution and sensor size. A pixel is an element in a grid that responds to light. As for the word “pixel”, it’s an abbreviation. Short for “picture element”.

So it has all the colours then?

Yes, all three RGB channels – red, green, blue. Unless, of course, it’s monochrome, and then it’s only shades of grey from black to white. Like the new, pricey Leica M11 Monochrom. A sensor has sensor elements. Some call them sensels, an abbreviation just like pixel.

[exasperated] You mean pixels. With all the colours.

No. There’s a filter over the sensor so that each element registers only red, green or blue light. So they can record “all” the colours somewhere, just not at every sensor element.

So they call them pixels even though they’re not. How do they “see” every colour?

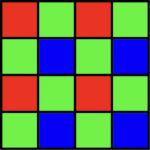

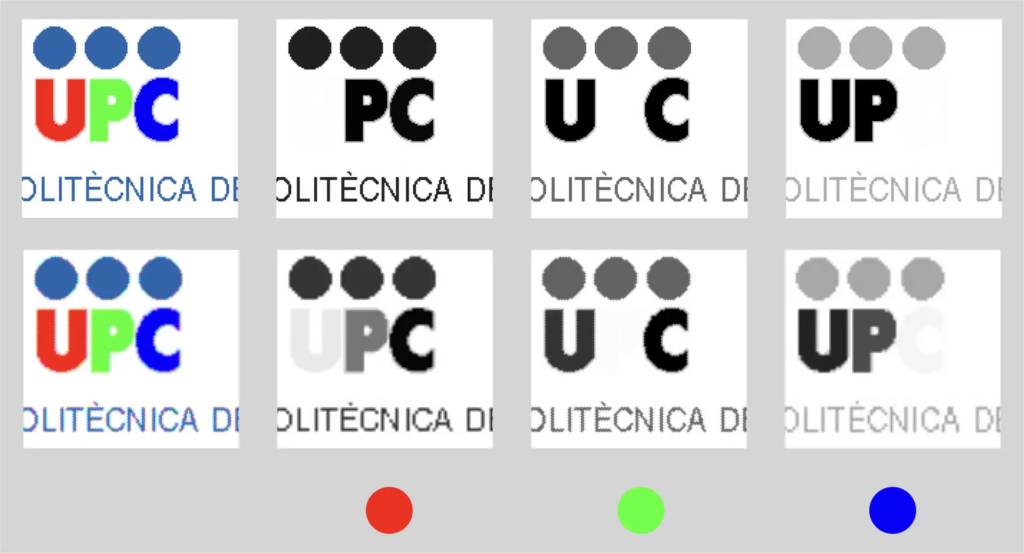

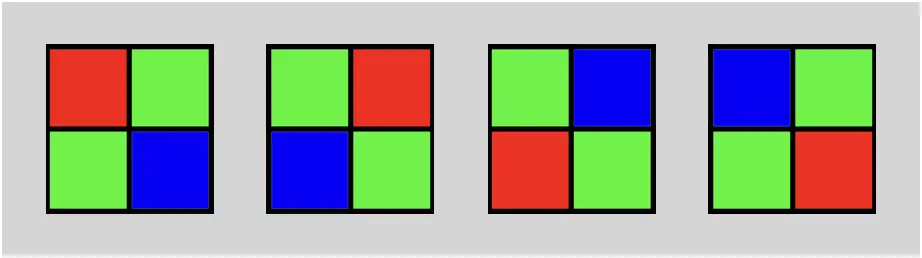

They have a filter over the sensor. It’s made up of elements in an arrangement like this. Here are four of them in a 2 by 2 grid:

It’s called a Bayer filter. Each square is over a sensor element. The colour of each filter ensures that light from only one RGB channel – colour – reaches the sensor element. It blocks the others.
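To make the filter concrete, here is a minimal Python sketch of what the sensor records. It assumes the common RGGB layout (red and green on one row, green and blue on the next); the exact arrangement varies between cameras, and the function names are hypothetical.

```python
# Bayer-filter sketch, assuming an RGGB layout:
#   row 0: R G R G ...
#   row 1: G B G B ...
Image = list[list[tuple[int, int, int]]]   # rows of (R, G, B) values, 0..255
Raw = list[list[int]]                      # one intensity value per sensor element

def bayer_channel(row: int, col: int) -> int:
    """Index of the colour (0=R, 1=G, 2=B) the filter passes at this element."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1   # R on even columns, G on odd
    return 1 if col % 2 == 0 else 2       # G on even columns, B on odd

def mosaic(image: Image) -> Raw:
    """Simulate the sensor: each element keeps only the channel its filter passes."""
    return [[pixel[bayer_channel(r, c)] for c, pixel in enumerate(row)]
            for r, row in enumerate(image)]

# A uniform orange patch: the raw data alternates between channel values.
orange = [[(255, 128, 0)] * 4 for _ in range(2)]
for row in mosaic(orange):
    print(row)   # [255, 128, 255, 128] then [128, 0, 128, 0]
```

Each element ends up holding one number, not three.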

Why are there so many green squares? And why’s it called a Bayer filter?

Our eyes are more sensitive to green. Especially at night. As for the name, it’s named after Bryce E. Bayer, who worked at Kodak. He filed for a patent in 1975, calling it a “Color Imaging Array”; it was granted in 1976.

1975. So Kodak had a patent then. They were first. But they’re not in the digital space. Now. Why?

Unfortunately, Kodak did not pursue digital imaging vigorously, preferring to bank on the cash-cow of film. Bankruptcy followed.

But that would mean that each pixel doesn’t have all the colours. That’s not a pixel. So that’s why they call them “effective pixels”, because they aren’t real…?

Sort of. They are based on incomplete information. Most cameras just say mega-pixels. But some are calling them effective. Hedging their bets, I guess. Being more honest. [rummages] But it has a specific meaning – an element that contributes to the final image. According to Wikipedia, either directly or indirectly. In this case one channel contributes directly. The others from…

This is confusing. What’s the actual resolution?

Less than stated. Each element has incomplete information. Depends…
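One way to put numbers on “less than stated” is to count what a hypothetical 40-million-element sensor with an RGGB Bayer filter actually measures per channel, and what averaging each 2×2 tile into one full-colour pixel would leave. A back-of-the-envelope sketch, not any manufacturer’s definition of “effective pixels”:

```python
# Sample counts for a hypothetical 40-million-element sensor (RGGB layout assumed).
sensor_elements = 40_000_000

green = sensor_elements // 2   # half the filters are green
red = sensor_elements // 4     # a quarter are red
blue = sensor_elements // 4    # a quarter are blue

# Averaging each 2x2 tile (R, G, G, B) into one full-colour pixel:
binned_pixels = sensor_elements // 4

print(f"green samples: {green:,}")
print(f"red samples:   {red:,}")
print(f"blue samples:  {blue:,}")
print(f"full-colour pixels after 2x2 averaging: {binned_pixels:,}")
```

Every element measures something, but only one channel each; the rest has to be interpolated, or the resolution given up.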

Enough talk. Just show me [pause] how the sensor “sees” it.

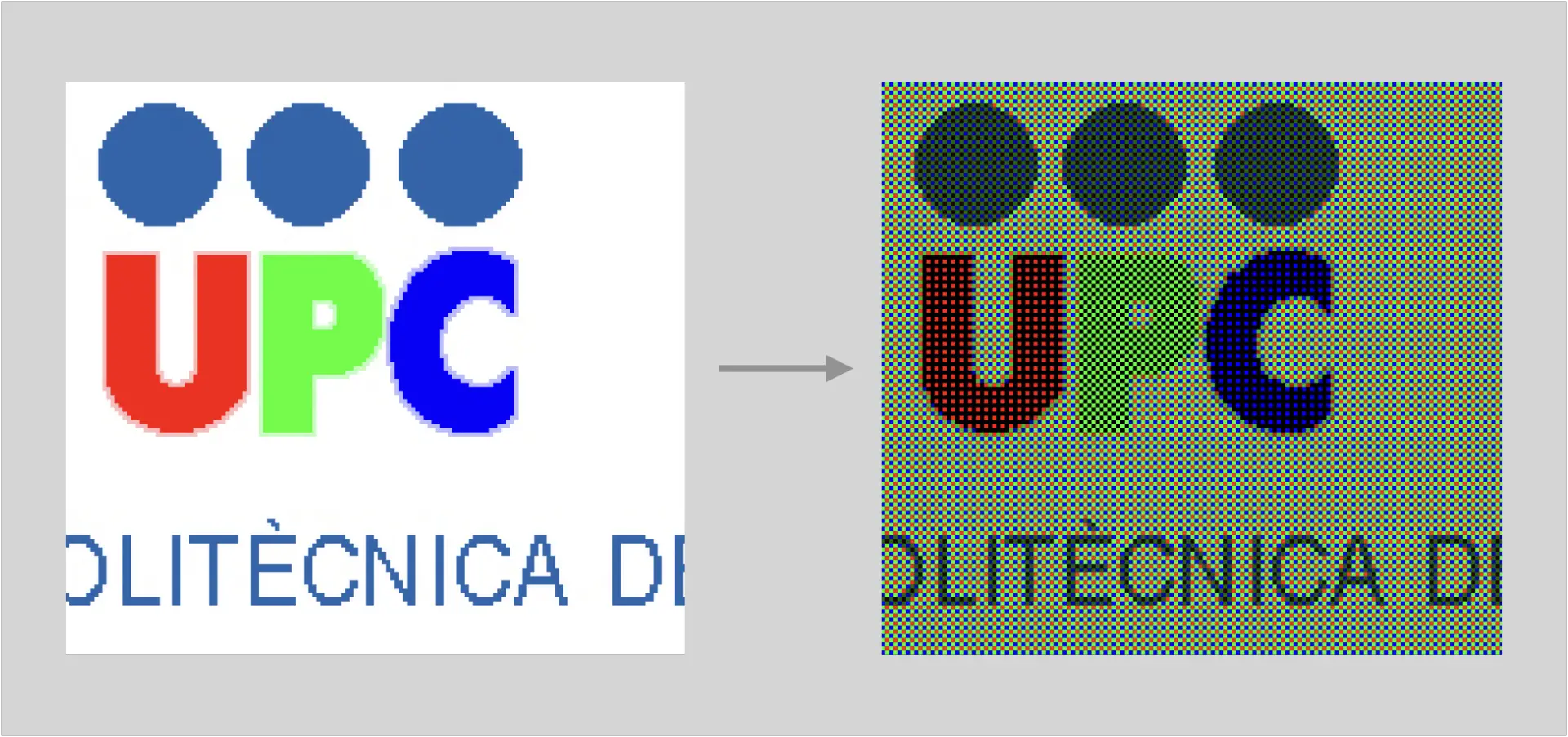

Here’s a small part of a scene so you can see the pixels. On the top, the scene. On the bottom, the way the sensor sees it. Well, after colouring. Each sensor element “sees” the scene in monochrome, as brightness only; the colour is added afterwards, according to the filter over that element.

Uggh. But that can’t be the final image. Not even on an old cell. It’s awfully green. Why?

The eye [grunts]. The human eye is more sensitive to green. Unless [stops and grunts again]. Too much info. We see green really well in the dark. Red and blue not so well…
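“More sensitive to green” can be made concrete with the Rec. 709 (sRGB) luminance weights, a standard formula for how much each linear RGB channel contributes to perceived brightness. These are daylight weights (night vision behaves differently), and the text itself doesn’t name a formula, so treat this as an illustration:

```python
# Rec. 709 / sRGB luminance weights (daylight vision): green dominates.
REC709_WEIGHTS = {"R": 0.2126, "G": 0.7152, "B": 0.0722}

def luminance(r: float, g: float, b: float) -> float:
    """Relative luminance of a linear-light RGB triple, each channel in 0..1."""
    return (REC709_WEIGHTS["R"] * r
            + REC709_WEIGHTS["G"] * g
            + REC709_WEIGHTS["B"] * b)

# Equal amounts of pure red, green and blue light differ a lot in brightness.
for name, rgb in {"red": (1, 0, 0), "green": (0, 1, 0), "blue": (0, 0, 1)}.items():
    print(f"{name:5s} -> {luminance(*rgb):.4f}")
```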

Too dark. Pixelated. Looks screened. Like getting up close and personal with a billboard.

[sighs] Correct. Each element in the final pic has three channels of RGB info. But it only has direct knowledge of – ummm – only sees – one. That’s why it’s so dark.

So it’s like black and white. How would it look in black and white? And each channel…

Thought of that. You may not like it, but let’s try a simpler image. Makes it more obvious. Trust me. Let’s look at the zoomed-in bit.

On the left, the image. Right, what the sensor sees.

The sensor sees the “colour” for each element in grey. Intensity. It’s green because it has more green sensor elements. Well, elements that see green. That’s why it’s so green. And it’s dark because each element records nothing for the other two channels.
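The dark, green look can be reproduced with a small sketch (RGGB layout assumed; function names hypothetical): put each grey reading back into the one channel it measured, leave the other two at zero, and average. Even for a pure white scene, half the elements carry green and only a quarter each carry red and blue, so every channel averages well below full brightness.

```python
# "What the sensor sees" sketch, assuming an RGGB Bayer layout.
def bayer_channel(row: int, col: int) -> int:
    """0=R, 1=G, 2=B for an RGGB pattern."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2

def colourise(raw: list[list[int]]) -> list[list[tuple[int, int, int]]]:
    """Put each grey reading into the single channel it measured; the other two stay 0."""
    out = []
    for r, row in enumerate(raw):
        out_row = []
        for c, value in enumerate(row):
            rgb = [0, 0, 0]
            rgb[bayer_channel(r, c)] = value
            out_row.append(tuple(rgb))
        out.append(out_row)
    return out

def channel_means(image):
    """Average value of each channel over the whole image."""
    pixels = [p for row in image for p in row]
    return tuple(sum(p[i] for p in pixels) / len(pixels) for i in range(3))

# A pure white scene: every sensor element reads 255.
white_raw = [[255] * 4 for _ in range(4)]
print(channel_means(colourise(white_raw)))   # (63.75, 127.5, 63.75)
```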

So what do they do to make it look better?

Proprietary algorithms. Call them part of an “image processing engine”.

In the final image, after processing, each pixel has a value in every RGB channel: one that’s already known, measured directly, and the other two computed by interpolation.

Huh, interpolation?

An educated guess.

They fill in the missing bits.

By “reconstructing” the colours. They could average each 2 x 2 grid to make a single pixel. But that would mean lower resolution. A trade-off. Instead, they use info from neighbouring elements to construct a value for each element. It’s actually called “demosaicing”.
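Camera makers’ demosaicing algorithms are proprietary, so the sketch below uses plain bilinear interpolation instead, a simple textbook method: each missing channel is the average of the neighbouring elements (in the surrounding 3×3 block) that measured it. RGGB layout assumed; names are hypothetical.

```python
# Bilinear demosaicing sketch (a simple stand-in, not any camera's method).
def bayer_channel(row: int, col: int) -> int:
    """0=R, 1=G, 2=B for an assumed RGGB pattern."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2

def mosaic(image):
    """Simulate the sensor: keep only the channel each element's filter passes."""
    return [[pixel[bayer_channel(r, c)] for c, pixel in enumerate(row)]
            for r, row in enumerate(image)]

def demosaic_bilinear(raw):
    """Rebuild three channels per element from a Bayer mosaic (at least 2x2)."""
    h, w = len(raw), len(raw[0])
    out = []
    for r in range(h):
        out_row = []
        for c in range(w):
            rgb = []
            for ch in range(3):
                if bayer_channel(r, c) == ch:
                    rgb.append(float(raw[r][c]))   # measured directly
                    continue
                # Educated guess: average the 3x3 neighbours that measured this channel.
                samples = [raw[rr][cc]
                           for rr in range(max(r - 1, 0), min(r + 2, h))
                           for cc in range(max(c - 1, 0), min(c + 2, w))
                           if bayer_channel(rr, cc) == ch]
                rgb.append(sum(samples) / len(samples))
            out_row.append(tuple(rgb))
        out.append(out_row)
    return out

orange = [[(255, 128, 0)] * 4 for _ in range(4)]
restored = demosaic_bilinear(mosaic(orange))
print(restored[1][2])   # (255.0, 128.0, 0.0)
```

On a flat patch of colour the reconstruction is exact; the trouble starts at edges, where neighbouring elements see different things.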

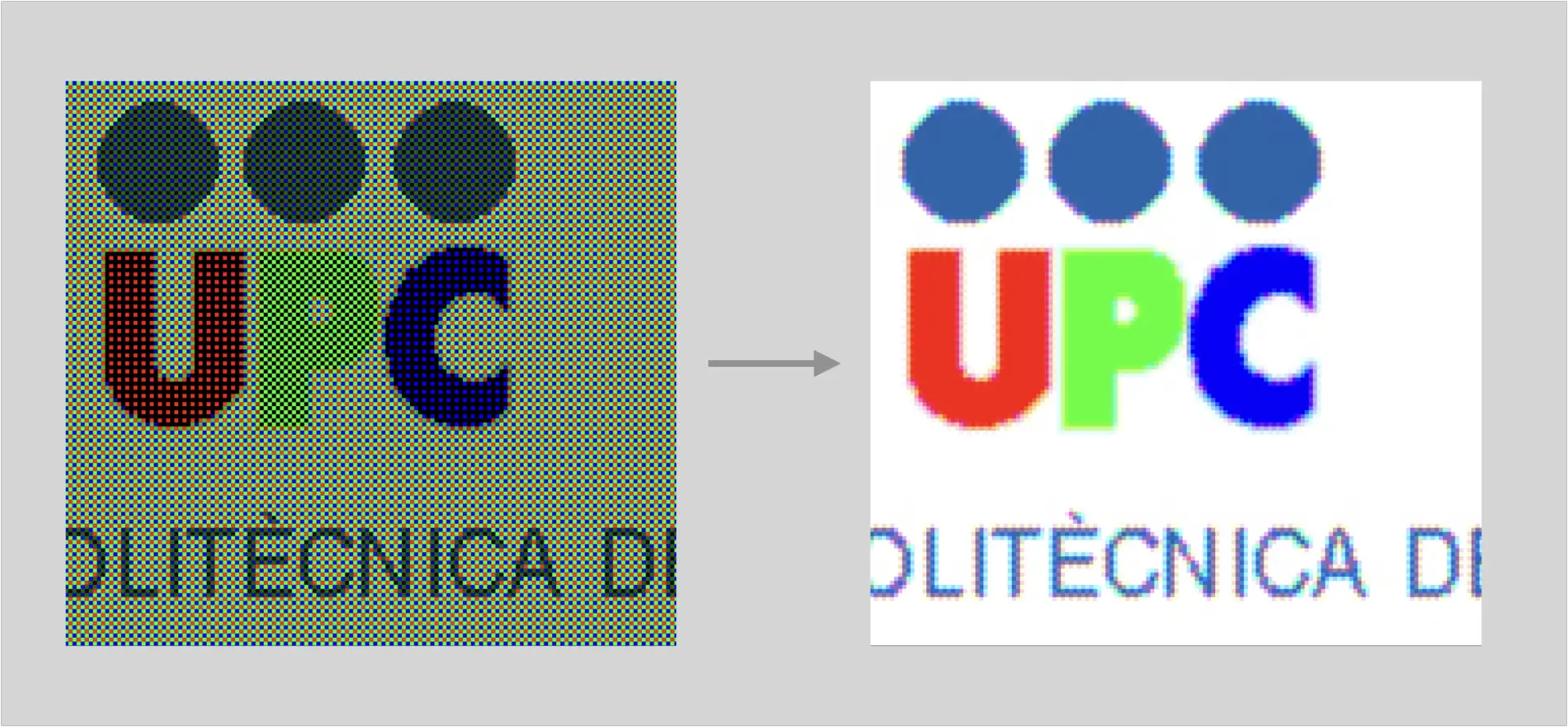

It already looked like a bad mosaic. [sighs] Blotchy. Too dark and too green. So what’s it look like after?

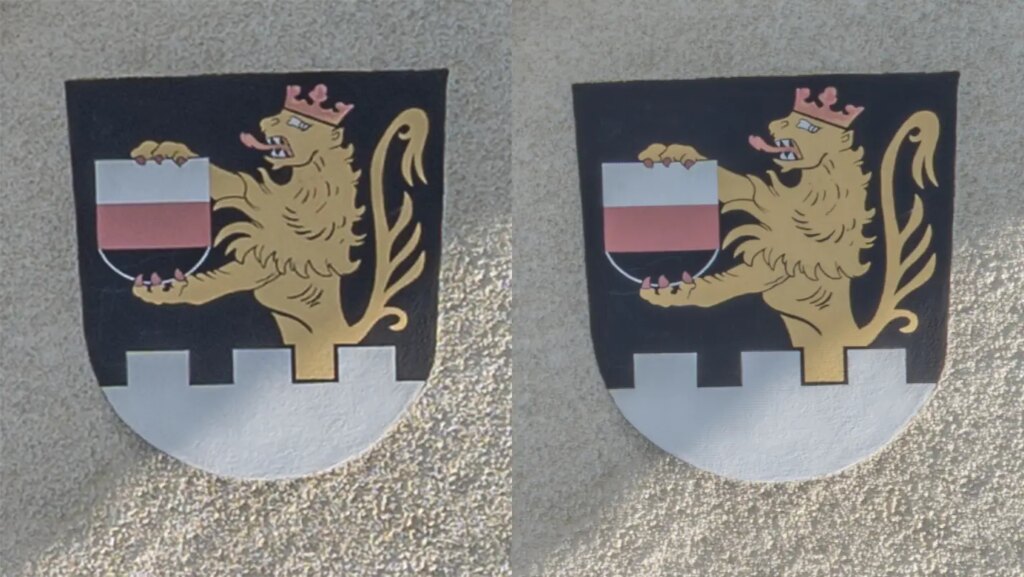

Before and after demosaicing.

But it’s still fuzzy. Looks way better than the left. But the filtered one still doesn’t look quite right.

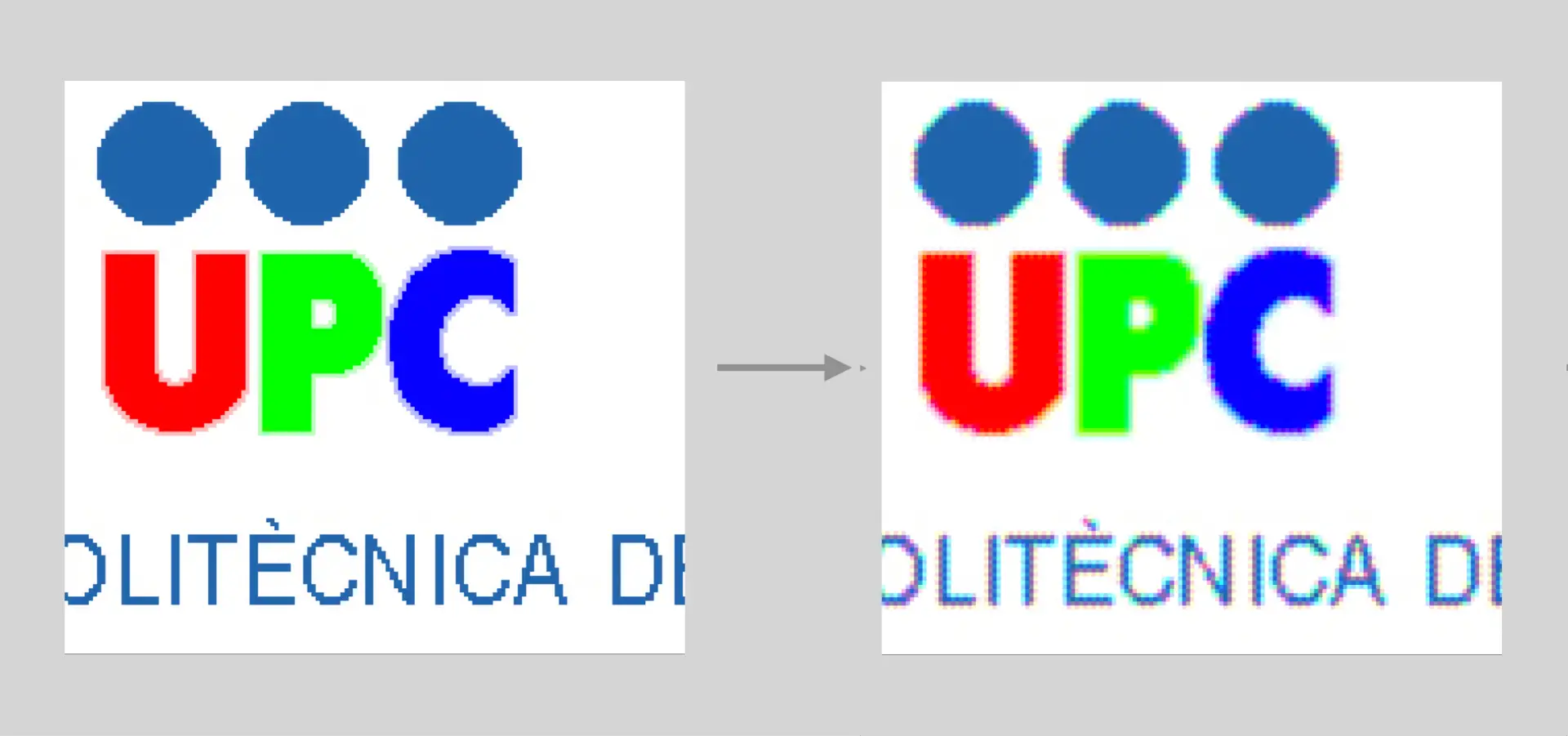

So let’s zoom in even more.

Left: the original image. Right: after demosaicing.

I see some weird stuff. It seems to pulsate – that blue circle. Hovering. Everything seems even more smudged. Red bits everywhere. And jagged text. Everywhere. Colour fringing around the blue dots. And text. Like a bad camera lens. And that thing over the E is red. Thought so.

Agreed. It’s even clearer when we compare the original with the processed image channel by channel, each channel in black and white. That’s the way the sensor actually sees/records the scene.

Top: the original image. Bottom: the image after Bayer filtering and demosaicing. Each RGB channel is shown in grey.

Artefacts show up, particularly in “U P C”: the U in the red channel and the C in the blue one. They’re faint. But there’s no P artefact in the green one, because the sensor records more green info. Pixels near the boundary [corrects] edges don’t have enough info…
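A one-dimensional sketch of why edges suffer, assuming a row of alternating red and green filters and simple neighbour averaging (not any camera’s actual method): the red value at a green site is guessed from the red sites on either side. Away from the edge the guess is exact; right at the edge it lands halfway, a false intermediate colour.

```python
# 1-D edge-artefact sketch, assuming a row of filters R, G, R, G, ...
true_red = [0, 0, 0, 0, 255, 255, 255, 255]   # a sharp dark-to-red edge

def is_red_site(i: int) -> bool:
    """Red filters sit on even positions in this assumed row."""
    return i % 2 == 0

def reconstruct_red(true_values: list[int]) -> list[float]:
    """Keep red where it was measured; elsewhere average the measured red neighbours."""
    n = len(true_values)
    out = []
    for i in range(n):
        if is_red_site(i):
            out.append(float(true_values[i]))
        else:
            neighbours = [true_values[j] for j in (i - 1, i + 1)
                          if 0 <= j < n and is_red_site(j)]
            out.append(sum(neighbours) / len(neighbours))
    return out

estimate = reconstruct_red(true_red)
errors = [abs(e - t) for e, t in zip(estimate, true_red)]
print(estimate)   # [0.0, 0.0, 0.0, 127.5, 255.0, 255.0, 255.0, 255.0]
print(errors)     # the only error, 127.5, sits right at the edge
```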

Just make the sensor bigger and crop. Still, it’ll need work.

Right. They do that. But different cameras “see” the same scene differently. Like film…

Cameras can combine images. Do HDR, just like an iPhone. Same scene, different exposures. Combine them. And cameras can deal with camera shake. Image stabilisation. If they move the sensor for that, couldn’t they do something similar to get the colours better?

You’re right. In-body image stabilisation works by moving the sensor. To get all three actual RGB channels at each point, just move the sensor a bit at a time in several directions. And, just like HDR, combine the images.

Here’s an example. With 4 images. Ensure that a 2×2 grid in the image is covered by sensors in each of these four arrangements:

Or use a larger grid [4 by 4, for example] and do the same thing.

There are twice as many green as red or blue. And an equal number of red and blue. So they’ll have to be combined. I mean the green…
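A minimal pixel-shift sketch, assuming four exposures with the sensor offset by exactly one element horizontally, vertically and diagonally (plus the unshifted one), an RGGB filter and a perfectly still scene. Over the four shots every scene point is seen through one red, two green and one blue filter; here the two green readings are simply averaged. Names are hypothetical.

```python
# Pixel-shift sketch (assumptions: RGGB filter, exact one-element offsets, static scene).
OFFSETS = [(0, 0), (0, 1), (1, 0), (1, 1)]   # sensor offsets, in elements

def bayer_channel(row: int, col: int) -> int:
    """0=R, 1=G, 2=B for an RGGB pattern."""
    if row % 2 == 0:
        return 0 if col % 2 == 0 else 1
    return 1 if col % 2 == 0 else 2

def capture(scene, dy: int, dx: int):
    """One exposure at offset (dy, dx): a (channel, value) pair per scene point."""
    shot = []
    for r, row in enumerate(scene):
        shot_row = []
        for c, pixel in enumerate(row):
            ch = bayer_channel(r + dy, c + dx)   # filter now over this scene point
            shot_row.append((ch, pixel[ch]))
        shot.append(shot_row)
    return shot

def combine(shots):
    """Merge the exposures: R and B are seen once, G twice (averaged)."""
    h, w = len(shots[0]), len(shots[0][0])
    result = []
    for r in range(h):
        row = []
        for c in range(w):
            readings = {0: [], 1: [], 2: []}
            for shot in shots:
                ch, value = shot[r][c]
                readings[ch].append(value)
            row.append(tuple(sum(readings[ch]) / len(readings[ch]) for ch in range(3)))
        result.append(row)
    return result

scene = [[(10, 20, 30), (40, 50, 60)],
         [(70, 80, 90), (100, 110, 120)]]
shots = [capture(scene, dy, dx) for dy, dx in OFFSETS]
print(combine(shots))   # every channel measured, no interpolation needed
```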

But how many images would we need?

At least 4, to ensure that we have all three RGB channels at each spot. So 4 or 16. Here’s an example of some images at ISO 100. On the left, the image after demosaicing. The right uses pixel-shifting, not demosaicing, made using a 4 by 4 grid overlaying it 16 times. With a resolution four times that on the left. Square root.
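A quick arithmetic check, reading “four times” as four times the pixel count: width and height then each grow by the square root of 4, i.e. they double. The file-size line assumes uncompressed 16-bit RGB and the 40-million-element sensor from the start of the scene, both illustrative choices:

```python
import math

# Illustrative numbers: the 40-million-element camera from the start of the scene.
base_pixels = 40_000_000
pixel_count_factor = 4                             # "four times" read as pixel count

shifted_pixels = base_pixels * pixel_count_factor
linear_factor = math.sqrt(pixel_count_factor)      # growth in width and in height

# Uncompressed size, assuming 3 channels at 16 bits (2 bytes) each per pixel.
bytes_per_pixel = 3 * 2
size_gb = shifted_pixels * bytes_per_pixel / 1e9

print(f"{shifted_pixels:,} pixels, {linear_factor:.0f}x wider and taller, "
      f"~{size_gb:.2f} GB uncompressed")
```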

Zooming in shows that the pixel-shifted image is definitely sharper.

Of course it’s sharper. It has more pixels. So the files will be huge. So I’d need one of those expensive pixel-shifting cameras to do super images?

Around €4,000 for the pixel-shifting ones. But you may not need one. Depends on what you want to do with the images.

Gulp. And then there’s that moving sensor – one more part to maintain. And what if it gets out of alignment? This all seems [pauses] too complicated. My head hurts.

[scene end]

Conclusion:

I trust this cleared up mega-pixels and digital camera sensors. But… it may have raised even more issues. The point I wanted to make is that there’s more to sensors than meets the eye. And to colour. And to the human eye. If you’re interested in reading more, Cambridge in Colour is a good source of information about colour.


Comments

Neil Mitchell on Of Camera Sensors and Mega-Pixels – A Screen Play

Comment posted: 28/05/2023